Go is steadily gaining popularity among programmers and sysadmins alike, for a variety of reasons. For example, it is very easy to spin up user-level threads (goroutines), and channels offer a neat way to communicate between them. Go also has an effective scheduler that can use multiple cores when they are available on the machine. Go is popular for building web servers too: there are well-known open-source Go web servers such as Caddy and Traefik (the latter is especially useful when microservices or service discovery are involved).

import "net/http"

Go ships with a built-in HTTP library in the net/http package, and we can use it to build a web server. Recent versions even support reverse proxying natively through a few primitives (see net/http/httputil). The package provides two simple functions: ListenAndServe for spinning up an HTTP server and ListenAndServeTLS for an HTTPS server. Let's see how ListenAndServe works in the net/http package.

The documentation says the function takes a string argument for the listen address and an HTTP handler (for handling the routes).

func ListenAndServe(addr string, handler Handler) error

Out of curiosity, I decided to check what the Handler type is. From the package source, it is an interface type that exposes a ServeHTTP() method.

type Handler interface {

ServeHTTP(ResponseWriter, *Request)

}

Which means I can use any struct that exposes a ServeHTTP() method in place of the handler: the magic of Go's interfaces :). Now let's see what the ListenAndServe() function is actually doing.

func ListenAndServe(addr string, handler Handler) error {

server := &Server{Addr: addr, Handler: handler}

return server.ListenAndServe()

}

So ListenAndServe uses the address and handler params to create a Server struct and then calls its ListenAndServe() method, which in turn creates the TCP listener for the HTTP server.

OK, now we know how Go starts the listening socket. Let's try creating a simple web server. The documentation page for the net/http package provides some simple examples. Let's look at a very basic one.

package main

import (

"fmt"

"net/http"

)

func testHandler(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World"))

return

}

func main() {

http.HandleFunc("/", testHandler)

if err := http.ListenAndServe(":8080", nil); err != nil {

fmt.Printf("Stopping web server: %v\n", err)

}

}

This spins up a web server listening on port 8080 that serves “Hello World” for the root URL. Let’s run the code and see.

# run the file from a shell

$ go run simple.go

# now run the curl command from a different shell

$ curl localhost:8080/

Hello World

The web server is working fine. But now I'm curious about one thing. We are not passing any handler; instead, the program calls http.HandleFunc() before calling ListenAndServe(). So I decided to see how the library finds the handler even though we pass nil as the handler to ListenAndServe(). We already know that ListenAndServe() uses the ListenAndServe() method of the Server struct. Let's see what it really does.

func (srv *Server) ListenAndServe() error {

addr := srv.Addr

if addr == "" {

addr = ":http"

}

ln, err := net.Listen("tcp", addr)

if err != nil {

return err

}

return srv.Serve(tcpKeepAliveListener{ln.(*net.TCPListener)})

}

So ListenAndServe creates a TCP socket for the listen address that we pass and then calls the Serve() method. Following the code flow, Serve() then calls the newConn() method, which returns a private struct called conn. Below is the corresponding code from the http package.

func (srv *Server) newConn(rwc net.Conn) *conn {

c := &conn{

server: srv,

rwc: rwc,

}

if debugServerConnections {

c.rwc = newLoggingConn("server", c.rwc)

}

return c

}

func (srv *Server) Serve(l net.Listener) error {

....

....

for {

rw, e := l.Accept()

...

...

c := srv.newConn(rw)

c.setState(c.rwc, StateNew) // before Serve can return

go c.serve(ctx)

}

}

From the above code, Serve() runs an infinite for loop accepting new incoming connections. It creates a conn struct for each incoming connection and then calls that conn's serve() method in a new goroutine.

But this doesn't answer my question about how it handles the nil handler and how it finds the handler function that I registered via http.HandleFunc(). So the only option is to follow the flow and see how the serve() method handles the connections.
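The accept-then-goroutine pattern is not specific to HTTP. Here is a stripped-down sketch of it on a plain TCP listener; serveConn, acceptLoop and dialOnce are hypothetical names of mine, and the real Server.Serve does far more (connection state tracking, backoff on errors, shutdown handling).

```go
package main

import (
	"fmt"
	"io"
	"net"
)

// serveConn is a hypothetical per-connection handler: it writes a fixed
// greeting and closes the connection.
func serveConn(c net.Conn) {
	defer c.Close()
	fmt.Fprint(c, "hello\n")
}

// acceptLoop accepts connections until the listener is closed and hands
// each one to its own goroutine, in the spirit of Server.Serve.
func acceptLoop(l net.Listener) {
	for {
		c, err := l.Accept()
		if err != nil {
			return // listener closed or failed; stop accepting
		}
		go serveConn(c)
	}
}

// dialOnce starts a listener on a free local port, connects to it once
// and returns whatever the server side wrote.
func dialOnce() (string, error) {
	l, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer l.Close()
	go acceptLoop(l)

	c, err := net.Dial("tcp", l.Addr().String())
	if err != nil {
		return "", err
	}
	defer c.Close()
	b, err := io.ReadAll(c) // returns once serveConn closes its side
	return string(b), err
}

func main() {
	out, err := dialOnce()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(out)
}
```

Because every connection gets its own goroutine, a slow client only blocks its own goroutine and not the accept loop.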

type serverHandler struct {

srv *Server

}

func (sh serverHandler) ServeHTTP(rw ResponseWriter, req *Request) {

handler := sh.srv.Handler

if handler == nil {

handler = DefaultServeMux

}

if req.RequestURI == "*" && req.Method == "OPTIONS" {

handler = globalOptionsHandler{}

}

handler.ServeHTTP(rw, req)

}

func (c *conn) serve(ctx context.Context) {

....

....

serverHandler{c.server}.ServeHTTP(w, w.req)

....

....

...

}

Bingo. In the package source above, the serve() method creates a serverHandler struct, which has a ServeHTTP() method. And if we go through the ServeHTTP() code, there is an if condition that checks whether the server's handler is nil. If it is nil, it sets the handler to the global variable DefaultServeMux. So now I'm sure that http.HandleFunc() is somehow using this global variable. Let's see if we can find the last piece of the puzzle too.

type ServeMux struct {

mu sync.RWMutex

m map[string]muxEntry

hosts bool // whether any patterns contain hostnames

}

....

....

var DefaultServeMux = &defaultServeMux

var defaultServeMux ServeMux

....

....

func HandleFunc(pattern string, handler func(ResponseWriter, *Request)) {

DefaultServeMux.HandleFunc(pattern, handler)

}

....

....

func (mux *ServeMux) ServeHTTP(w ResponseWriter, r *Request) {

...

...

}

So the package initializes the global variable DefaultServeMux to point at defaultServeMux, a zero-value ServeMux. And ServeMux does have a ServeHTTP() method, which is mandatory for anything that implements the http Handler interface (as I mentioned at the start of the post).

Now we know how the http.HandleFunc() function works. It takes a route pattern and a handler function as arguments and registers them on DefaultServeMux. Whenever a request matches this route, the server executes the handler function.

The other, more generic way is to use the http.Handle() function directly, again passing a nil handler to ListenAndServe(). Unlike HandleFunc(), Handle() takes a pattern and a handler of type Handler, which means we can pass any struct that exposes a ServeHTTP() method. Let's see if that is correct.

package main

import (

"fmt"

"net/http"

)

type testHandler struct{}

func (t *testHandler) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler ServerHTTP"))

return

}

func main() {

http.Handle("/", &testHandler{})

if err := http.ListenAndServe(":8080", nil); err != nil {

fmt.Printf("Stopping web server: %v\n", err)

}

}

In the above example, I simply created a struct called testHandler that exposes a ServeHTTP() method, which is the only requirement for the http Handler type. Let's see if this works.

$ go run simple2.go

$ curl localhost:8080/

Hello World from testHandler ServerHTTP

Bingo, that works. So we can use any struct that exposes a ServeHTTP() method as a Handler. In both examples we are indirectly modifying the global variable DefaultServeMux, by registering either a handler or a handler function on it. And every time a request matches the route pattern, the server passes the request to the associated handler.

Now what if we want to use different handlers for different route patterns? Maybe by calling these functions multiple times with different patterns and handlers? Let's try it out with a simple program.

package main

import (

"fmt"

"net/http"

)

type testHandler1 struct{}

func (t *testHandler1) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler1 ServerHTTP"))

return

}

type testHandler2 struct{}

func (t *testHandler2) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler2 ServerHTTP"))

return

}

func testHandlerFunc1(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc1"))

return

}

func testHandlerFunc2(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc2"))

return

}

func main() {

http.Handle("/test_handler1", &testHandler1{})

http.Handle("/test_handler2", &testHandler2{})

http.HandleFunc("/test_handler_func_1", testHandlerFunc1)

http.HandleFunc("/test_handler_func_2", testHandlerFunc2)

if err := http.ListenAndServe(":8080", nil); err != nil {

fmt.Printf("Stopping web server: %v\n", err)

}

}

In the above program, we register 4 different routes, 2 with Handle() and 2 with HandleFunc(). Let's test this out.

$ go run simple3.go

$ curl localhost:8080/test_handler1

Hello World from testHandler1 ServerHTTP

$ curl localhost:8080/test_handler2

Hello World from testHandler2 ServerHTTP

$ curl localhost:8080/test_handler_func_1

Hello World from testHandlerFunc1

$ curl localhost:8080/test_handler_func_2

Hello World from testHandlerFunc2

Bingo. That also works. So we can definitely register multiple routes by calling Handle()/HandleFunc() multiple times. This again made me curious about how the http package handles them. From our initial investigation, we found that both Handle() and HandleFunc() use the global variable DefaultServeMux under the hood. Let's see how the http package registers and handles multiple routes.

Handle and HandleFunc

First, let's check the source and see what both of these functions do.

func (mux *ServeMux) HandleFunc(pattern string, handler func(ResponseWriter, *Request)) {

mux.Handle(pattern, HandlerFunc(handler))

}

func Handle(pattern string, handler Handler) { DefaultServeMux.Handle(pattern, handler) }

func HandleFunc(pattern string, handler func(ResponseWriter, *Request)) {

DefaultServeMux.HandleFunc(pattern, handler)

}

From the above code block, Handle() calls the Handle() method of the DefaultServeMux global variable and registers the handler for the pattern that was passed as the argument. HandleFunc(), on the other hand, calls the HandleFunc() method of DefaultServeMux, which in turn calls the Handle() method of DefaultServeMux itself.

But wait, DefaultServeMux's Handle() method only takes a Handler, and we have a function. The only notable difference is that the function is wrapped in HandlerFunc() when it is passed to Handle(). So that must be what converts our function into an http Handler. Let's check.

// The HandlerFunc type is an adapter to allow the use of

// ordinary functions as HTTP handlers. If f is a function

// with the appropriate signature, HandlerFunc(f) is a

// Handler that calls f

type HandlerFunc func(ResponseWriter, *Request)

func (f HandlerFunc) ServeHTTP(w ResponseWriter, r *Request) {

f(w, r)

}

Bingo, HandlerFunc is a function type that also exposes a ServeHTTP() method, which satisfies the http Handler interface. And ServeHTTP() simply calls the receiver, in our case the handler function that we pass. So HandlerFunc() is a type conversion: it accepts a function and turns it into an http Handler that has a ServeHTTP() method.

So in the end, a handler passed to http.Handle() already has a ServeHTTP() method, and a function passed to http.HandleFunc() gets its own ServeHTTP() method under the hood via HandlerFunc.

Custom ServeMux

In all the above examples, we used either http.Handle() or http.HandleFunc() and always passed nil as the handler to ListenAndServe(). We also found that there is an if condition that checks whether the handler is nil: if it is, the server uses the DefaultServeMux global variable; otherwise it uses the handler directly. We also found that we can call http.Handle() and http.HandleFunc() multiple times to register multiple routes, each with its own handler. This is possible because DefaultServeMux has a Handle() method that registers the routes and their corresponding handlers. For each request, the server picks the best-matching route from the registered routes and calls the ServeHTTP() method of the handler associated with it.

But now imagine a scenario where we need to register multiple routes, each with its own handler, and we also need a middleware function such as a rate limiter in front of these routes. We can use http.Handle() or http.HandleFunc() to register all the routes, but once we call ListenAndServe() with a nil handler, the server starts dispatching incoming requests straight to them, and there is no obvious place in this flow to plug in a rate limiter. What we want is to accept the request and pass it to the rate limiter first. The rate limiter then decides whether to throttle the request. If the request is throttled, we don't pass it to the original request handler at all and simply return a 429 response. Only if the request is safe to process do we pass it on to the original handler.

To handle such scenarios, the net/http package lets us create a custom ServeMux for our web server. In our initial investigation, we saw that Go initializes the DefaultServeMux global variable, which is itself a ServeMux. First, let's see how we can use a ServeMux of our own.
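The "best-matching route" part is easy to observe, because ServeMux has a Handler() method that returns the handler and the pattern it would pick for a request. A minimal sketch, assuming three illustrative patterns of my own ("/", "/api/" and "/api/users") and hypothetical helper names:

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// matchedPattern reports which registered pattern the mux selects for path.
func matchedPattern(mux *http.ServeMux, path string) string {
	req := httptest.NewRequest(http.MethodGet, path, nil)
	_, pattern := mux.Handler(req)
	return pattern
}

// newDemoMux registers three hypothetical patterns with a no-op handler.
func newDemoMux() *http.ServeMux {
	mux := http.NewServeMux()
	noop := func(w http.ResponseWriter, r *http.Request) {}
	mux.HandleFunc("/", noop)          // catch-all
	mux.HandleFunc("/api/", noop)      // everything under /api/
	mux.HandleFunc("/api/users", noop) // exact path only
	return mux
}

func main() {
	mux := newDemoMux()
	for _, p := range []string{"/api/users", "/api/orders", "/about"} {
		fmt.Printf("%-12s -> %s\n", p, matchedPattern(mux, p))
	}
}
```

The exact path wins over the subtree pattern, the subtree pattern wins over the catch-all, and anything else lands on "/".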

package main

import (

"fmt"

"net/http"

)

type testHandler1 struct{}

func (t *testHandler1) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler1 ServerHTTP"))

return

}

type testHandler2 struct{}

func (t *testHandler2) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler2 ServerHTTP"))

return

}

func testHandlerFunc1(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc1"))

return

}

func testHandlerFunc2(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc2"))

return

}

func main() {

// Our custom Server Mux

mux := http.NewServeMux()

mux.Handle("/mux/test_handler1", &testHandler1{})

mux.Handle("/mux/test_handler2", &testHandler2{})

mux.HandleFunc("/mux/test_handler_func_1", testHandlerFunc1)

mux.HandleFunc("/mux/test_handler_func_2", testHandlerFunc2)

if err := http.ListenAndServe(":8080", mux); err != nil {

fmt.Printf("Stopping web server: %v\n", err)

}

}

In the above example, instead of using http.Handle()/http.HandleFunc() (in other words, DefaultServeMux), we create a custom serve mux and register all the routes on it. We then pass our custom serve mux as the handler to the ListenAndServe() function. Let's see if our example code works.

$ go run mux.go

$ curl localhost:8080/mux/test_handler1

Hello World from testHandler1 ServerHTTP

$ curl localhost:8080/mux/test_handler2

Hello World from testHandler2 ServerHTTP

$ curl localhost:8080/mux/test_handler_func_1

Hello World from testHandlerFunc1

$ curl localhost:8080/mux/test_handler_func_2

Hello World from testHandlerFunc2

Bingo, that works perfectly. Now let's see how we can wrap the custom serve mux in a simple rate limiter.

package main

import (

"fmt"

"net/http"

)

type testHandler1 struct{}

func (t *testHandler1) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler1 ServerHTTP"))

return

}

type testHandler2 struct{}

func (t *testHandler2) ServeHTTP(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandler2 ServerHTTP"))

return

}

func testHandlerFunc1(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc1"))

return

}

func testHandlerFunc2(w http.ResponseWriter, r *http.Request) {

w.Write([]byte("Hello World from testHandlerFunc2"))

return

}

func muxWrapper(handler http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

fmt.Println("Inside customMuxWrapper ...")

// Now do the rate limiting stuff

fmt.Println("Going to proceed the request ...")

handler.ServeHTTP(w, r)

})

}

func main() {

// Our custom Server Mux

mux := http.NewServeMux()

mux.Handle("/mux/test_handler1", &testHandler1{})

mux.Handle("/mux/test_handler2", &testHandler2{})

mux.HandleFunc("/mux/test_handler_func_1", testHandlerFunc1)

mux.HandleFunc("/mux/test_handler_func_2", testHandlerFunc2)

customMuxWrapper := muxWrapper(mux)

if err := http.ListenAndServe(":8080", customMuxWrapper); err != nil {

fmt.Printf("Stopping web server: %v\n", err)

}

}

In the above example, we create a custom serve mux via http.NewServeMux() and register all our routes on it. We then wrap the serve mux in a simple wrapper function that takes the incoming request and passes it to the mux's ServeHTTP() method, which dispatches it to the appropriate handler. Before ServeHTTP() is called I just added two print statements, but that is the place where we would add our rate limiting logic or any other middleware logic that should apply to all our routes. So the wrapper acts as middleware in front of the real handlers: every time the server receives a request, it first passes the request to our wrapper, and the wrapper performs the necessary checks before handing it on.

Conclusion

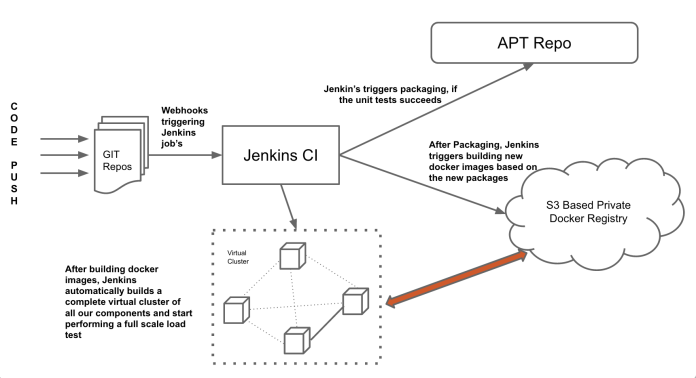

The net/http package provides a lot of options for building web servers. By using a custom serve mux, we can easily extend the design and make it more pluggable with middleware logic. Go web servers have a reputation for being highly efficient, and many companies are switching to Go-based web servers for that reason. Go binaries are also typically a single static binary, so we don't have to worry about packaging dependencies :). In my work, I'm currently using Google's Bazel for building my Go binaries.